Azure Databricks talking to Azure SQL Database using Private Endpoints

At work, a colleague reached out asking for help getting some Python code to query an Azure SQL Database. This is right up my street: fixing things I don’t use on a day-to-day basis is a challenge I just love working on.

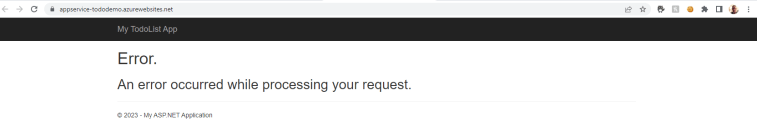

I will list the steps we took to achieve this. Bear in mind that we already have Azure SQL and Azure Databricks deployed at this point, and when we try to query the Azure SQL Database we see errors like the ones below:

com.microsoft.sqlserver.jdbc.SQLServerException: The TCP/IP connection to the host <redacted>.database.windows.net, port 1433 has failed. Error: “connect timed out. Verify the connection properties. Make sure that an instance of SQL Server is running on the host and accepting TCP/IP connections at the port. Make sure that TCP connections to the port are not blocked by a firewall.”.

as well as this one:

com.microsoft.sqlserver.jdbc.SQLServerException: Cannot open server “<server name redacted>” requested by the login. The login failed. ClientConnectionId:c5977705-8b83-4f12-a4ce-0268ac868798

Reading these errors, you might be tempted to look into whitelisting IP addresses.

Let’s write down the steps to fix this issue; maybe it will help someone, probably me when I forget I wrote this blog next week 🙂

Here is what we did:

- Add a new subnet to the databricks-vnet virtual network.

- Find your Azure SQL Server instance in the portal, go to the Networking tab and click Private access. Click the + to create a Private Endpoint; on the Virtual Network tab, choose the virtual network you’re using for Databricks and select the new subnet. Make sure to keep ‘Integrate with Private DNS Zone’ ticked.

- Once the Private Endpoint has been created, click on it and go to DNS Configuration. Click the link towards the bottom, under the heading Private DNS Zone, to be taken to your Private DNS Zone. Now click on ‘Virtual network links’, click the + to add a new Virtual Network Link and choose the Databricks VNet. Don’t tick ‘Enable auto registration’.
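If you want to confirm the Private DNS Zone link is doing its job, a quick check from a Databricks notebook is to resolve the server name and see whether it comes back as a private IP. The snippet below is only a rough sketch using the Python standard library; the function names are my own, not part of any Azure or Databricks API, and the 1433 port check simply mirrors the port in the error message above.

```python
import ipaddress
import socket


def is_private_address(ip: str) -> bool:
    """Return True if the IP address falls in a private (non-public) range."""
    return ipaddress.ip_address(ip).is_private


def check_private_resolution(hostname: str, port: int = 1433, timeout: float = 5.0) -> dict:
    """Resolve the hostname and try a plain TCP connection to the SQL port.

    Hypothetical helper: it only tells you what the name resolves to and
    whether the port accepts a TCP connection, nothing about logins.
    """
    ip = socket.gethostbyname(hostname)
    result = {
        "hostname": hostname,
        "ip": ip,
        "private": is_private_address(ip),
        "port_open": False,
    }
    try:
        # A successful connect means the network path to the endpoint is open.
        with socket.create_connection((ip, port), timeout=timeout):
            result["port_open"] = True
    except OSError:
        # Timeouts and refused connections both land here.
        pass
    return result
```

Calling `check_private_resolution("<your-server>.database.windows.net")` from a notebook cell should, if the Virtual Network link is in place, report an IP from your new subnet with `private` set to `True`. If you still get a public IP back, the DNS zone link is the first thing I would look at.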

So it’s just like any other Private Endpoint config; just remember to add the Virtual Network link. You also don’t need to whitelist any IP addresses or anything like that.
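For completeness, here is roughly what the query side might look like from a Databricks notebook once the networking is sorted. This is a sketch rather than my colleague’s actual code: `build_jdbc_url` and `read_table` are hypothetical helper names, and the server, database, table and credentials are placeholders you would supply yourself. Note that you still connect using the normal `database.windows.net` name; the Private DNS Zone takes care of pointing it at the private IP.

```python
def build_jdbc_url(server: str, database: str, port: int = 1433) -> str:
    """Build a SQL Server JDBC URL for an Azure SQL Database.

    `server` is the short server name, without the domain suffix.
    """
    return (
        f"jdbc:sqlserver://{server}.database.windows.net:{port};"
        f"database={database};encrypt=true;trustServerCertificate=false;loginTimeout=30;"
    )


def read_table(spark, jdbc_url: str, table: str, user: str, password: str):
    """Read a table into a Spark DataFrame over JDBC.

    `spark` is the SparkSession that Databricks notebooks provide.
    """
    return (
        spark.read.format("jdbc")
        .option("url", jdbc_url)
        .option("dbtable", table)
        .option("user", user)
        .option("password", password)
        .load()
    )
```

In a notebook you would call something like `read_table(spark, build_jdbc_url("<server>", "<database>"), "<schema>.<table>", user, password)`, ideally pulling the credentials from a secret scope rather than typing them into the cell.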
